Your wish is my command! Conversational recommendation systems and a future where we get what we want faster than ever.

How conversation, contextualization, and native interfaces will drive the evolution of recommendations

If you haven’t realized from the plethora of other articles I’ve written on the topic, I’m pretty enamored with recommendation systems.

The idea that we have preferences, whether we’ve offered them directly or they’ve been inferred, that enable our favorite systems to learn more about us and recommend something back is fundamentally simple, but it has powered hundreds of use cases, from finding your partner on Hinge to finding your favorite neighborhood spot on Yelp.

LLMs (large language models) and VLMs (vision language models) have fundamentally changed the way we can build recommendation systems, and today I want to spend some time thinking about how this underlying tech will power the next generation of recommenders.

It’s a pleasure to collaborate with Neel Kulkarni on this article. An email from Neel landed in my inbox a few months ago, full of his thoughts on this space. As we’ve jammed on ideas since, his insights have been crucial to putting this piece together. Now for an intro on Neel to lead us into this week’s article!

Hello! I’m Neel, a rising senior at the University of Virginia, a diehard Celtics fan, and an avid reader of Day to Data. In this week’s edition, I’m excited to be a small part of this piece on recommendation systems, to discuss their ability to create powerful consumer products, and to look at how AI continues to reshape them to better understand the next generation of users.

The evolution of the recommendation system

Recommendation systems have evolved in terms of how users interact with them:

Semi-static recommendations surfaced through “picked for you” sections of content, perhaps based on a user’s preferences up to that day but likely not updated in real time, like Yelp or Netflix.

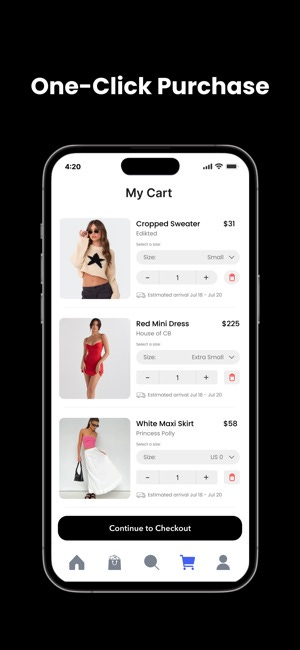

Recommendations powered by how we interact with previous visual or video content, with quick feedback loops iterating on the recommendations in real time like TikTok, Tinder, and Styl.

Hyper-aware and contextualized recommendations that exist within the native interface for an application.

Netflix and the Netflix Prize pioneered an early era of the “Recommended for You” algorithm and interface. Yelp followed suit, making a directory of local spots - from barbers to lawn care to restaurants - and trying to get community reviews to amplify what was best. But people craved better recommendations and more social features driven by people they knew.

I think Yelp walked so Beli could run in version 1.0 of recommendation system interfaces. When we last discussed Beli, the app had 3K App Store reviews; today it has over 6K. Beli lets users list, rank, and rate restaurants to share with their community of followers. The app recommends your next dining experience by cross-referencing your preferences with those of friends who have similar tastes. Beli has seen tremendous growth (and likely monetization) through its social media pages, sharing NYC restaurant recommendations with over 800K followers. As it has found compelling signs of product-market fit, the next steps will likely involve in-app monetization, perhaps by charging restaurants to advertise on the platform or by matching customers to restaurants they’ll love through a Seated-esque approach.
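To make "cross-referencing your preferences with friends who have similar tastes" concrete, here is a minimal sketch of a friend-based recommender. It is not Beli's actual method, which isn't public in this piece. The ratings, place names, 0-10 scale, and the `min_similarity` cutoff are all made up for illustration.

```python
# Hypothetical ratings on a 0-10 scale: user -> {place: score}.
ratings = {
    "you": {"falafel_bar": 9.0, "pasta_spot": 8.0, "slice_shop": 5.0},
    "ana": {"falafel_bar": 9.0, "pasta_spot": 9.0, "wine_bar": 9.0},
    "ben": {"falafel_bar": 3.0, "slice_shop": 10.0, "burger_stand": 9.0},
}


def similarity(a, b):
    """Agreement between two users on the places both have rated (0 to 1)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    mean_gap = sum(abs(a[p] - b[p]) for p in shared) / len(shared)
    return 1.0 - mean_gap / 10.0


def recommend(user, ratings, min_similarity=0.7, top_n=3):
    """Rank places the user hasn't rated, using only like-minded friends."""
    mine = ratings[user]
    totals, weights = {}, {}
    for friend, theirs in ratings.items():
        if friend == user:
            continue
        w = similarity(mine, theirs)
        if w < min_similarity:
            continue  # skip friends whose tastes diverge too much
        for place, score in theirs.items():
            if place in mine:
                continue  # only suggest places the user hasn't tried
            totals[place] = totals.get(place, 0.0) + w * score
            weights[place] = weights.get(place, 0.0) + w
    # Similarity-weighted average of the friends' scores for each place.
    predicted = {place: totals[place] / weights[place] for place in totals}
    return sorted(predicted.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


picks = recommend("you", ratings)
```

In this toy data, "ana" agrees with "you" on the shared places and "ben" does not, so only ana's untried pick makes it into `picks`. A real system would need far more data and would have to handle new users with no ratings at all.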

In version 2.0 of recommendations, we got Tinder and TikTok. They profited (tremendously) off our short attention spans and built algorithms that quickly ingested a user’s feedback and updated their picture of what that user wanted with every swipe. Swiping gestures and jam-packed feeds quickly moved beyond dating and video into new categories, like clothing.

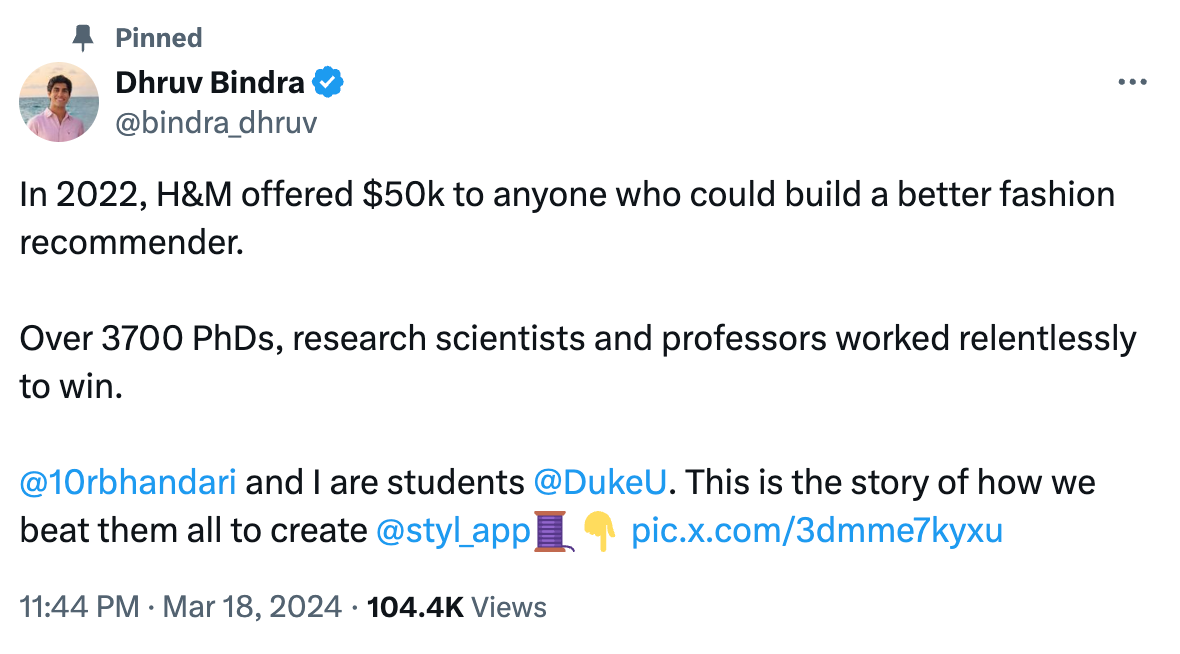

In 2022, as Dhruv shared in early 2024 on Twitter, H&M launched its own version of the Netflix Prize, paying $50K to anyone who could improve the fashion recommenders out there. What unfolded was Styl, which (as of March 2024) has 18K MAUs who have swiped on pieces of clothing 4.5M times. Styl is focused on women’s clothing and is best described as “Tinder for Clothes”. The app tracks users’ preferences with every swipe left or right, building a powerful, quick-to-improve recommendation system. Styl even tracks trending fashion items at individual colleges across the nation, showing users what their fellow classmates are swiping on most. As for the future of this interface, perhaps they white-label this tech so that every retailer has “Recommendations powered by Styl”, much like what H&M was likely searching for with their 2022 call to action. But for now, Styl is balancing the inundation of fashion with an easily accessible interface that users know and love: the swipe-centric era of recommendation systems, version 2.0.
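The swipe loop is easy to picture in code. Below is a rough sketch of how a preference profile might be nudged with every swipe. This is an illustrative running-average update, not Styl's, Tinder's, or TikTok's actual algorithm. The tags, the `learning_rate`, and the catalog are assumptions for the example.

```python
def update_preferences(prefs, item_tags, liked, learning_rate=0.2):
    """Nudge tag weights toward +1 on a right swipe and toward -1 on a left swipe."""
    target = 1.0 if liked else -1.0
    updated = dict(prefs)
    for tag in item_tags:
        current = updated.get(tag, 0.0)  # unseen tags start neutral
        updated[tag] = current + learning_rate * (target - current)
    return updated


def score(prefs, item_tags):
    """Average weight of an item's tags; unseen tags count as neutral (0)."""
    if not item_tags:
        return 0.0
    return sum(prefs.get(tag, 0.0) for tag in item_tags) / len(item_tags)


# Hypothetical swipe history: (tags on the item, swiped right?).
swipes = [
    ({"denim", "oversized"}, True),
    ({"floral", "mini"}, False),
    ({"denim", "cropped"}, True),
]

prefs = {}
for tags, liked in swipes:
    prefs = update_preferences(prefs, tags, liked)

# Hypothetical catalog, re-ranked after the swipes above.
catalog = {
    "denim jacket": {"denim", "oversized"},
    "floral dress": {"floral", "mini"},
}
ranked = sorted(catalog, key=lambda name: score(prefs, catalog[name]), reverse=True)
```

Because the profile updates after every single swipe, the ranking can shift within one session, which is the quick feedback loop that separates this era from the semi-static "picked for you" shelf.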

From natural language to your plans for the night

On to 3.0 and what’s to come. The future of recommendations will not look like those we know today.

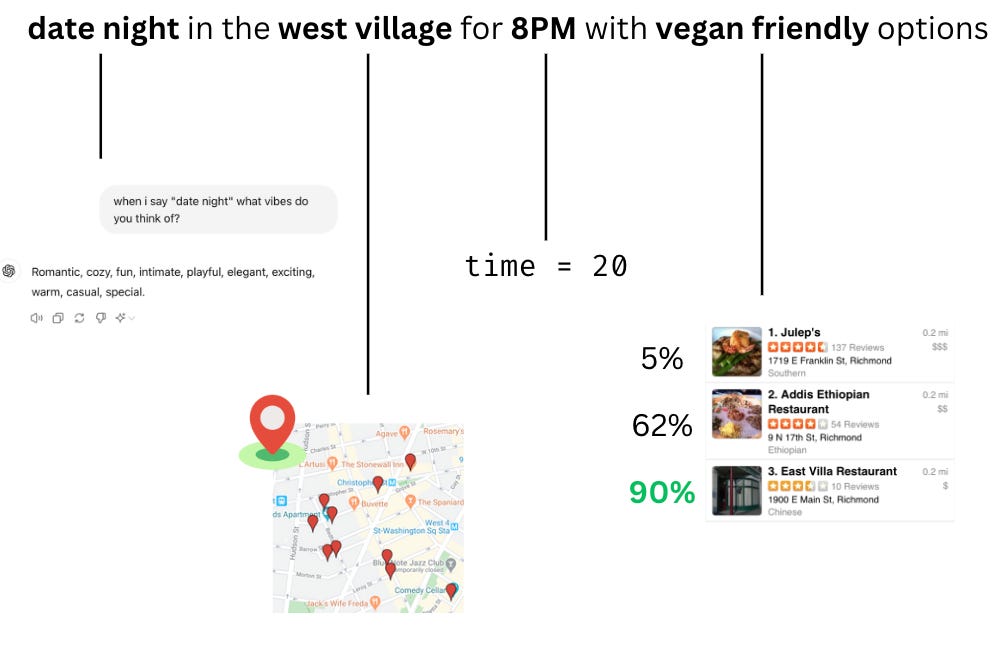

Large language models provide an unbelievable unlock: they can take strings of text and capture what’s actually being conveyed in natural language. “Date night for two in the West Village” can now lead to a booked reservation at Balaboosta for 7PM this Friday (a must try!!). The step-change improvement is in using an LLM to break down topics and context from a given piece of text, versus previous methods like Named Entity Recognition (NER). Take a prompt like “date night in the west village for 8PM with vegan friendly options”: an LLM can break it into an occasion (date night), a neighborhood (West Village), a time (8PM), and a dietary constraint (vegan-friendly).
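For the builders reading, here is one way that breakdown might look in code. This is only a sketch of the pattern (ask the model for JSON, then parse it into a typed structure). The `DiningRequest` fields, the instruction text, and the `llm` callable are all hypothetical, and `fake_llm` is a canned stand-in rather than a real model call.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class DiningRequest:
    """Structured view of a free-text dining request (field names are illustrative)."""
    occasion: Optional[str] = None
    neighborhood: Optional[str] = None
    time: Optional[str] = None
    dietary: List[str] = field(default_factory=list)


INSTRUCTIONS = (
    "Extract occasion, neighborhood, time, and dietary needs from the request. "
    "Reply with JSON only, using exactly those four keys."
)


def parse_request(prompt: str, llm: Callable[[str], str]) -> DiningRequest:
    """Send the request to any text-in, text-out model and parse its JSON reply."""
    raw = llm(f"{INSTRUCTIONS}\n\nRequest: {prompt}")
    data = json.loads(raw)
    return DiningRequest(
        occasion=data.get("occasion"),
        neighborhood=data.get("neighborhood"),
        time=data.get("time"),
        dietary=list(data.get("dietary") or []),
    )


def fake_llm(_: str) -> str:
    # Canned reply standing in for a real model; swap in an actual client here.
    return json.dumps({
        "occasion": "date night",
        "neighborhood": "West Village",
        "time": "8PM",
        "dietary": ["vegan-friendly"],
    })


request = parse_request(
    "date night in the west village for 8PM with vegan friendly options",
    fake_llm,
)
```

Once the request is structured like this, each field can be matched against restaurant data directly, which is much harder to do with the raw sentence.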

The unlock of LLMs means that recommendations no longer need to live in silos, be based on a handful of datasets, or flow one way. Recommendations can take a variety of natural language inputs and be delivered on demand.

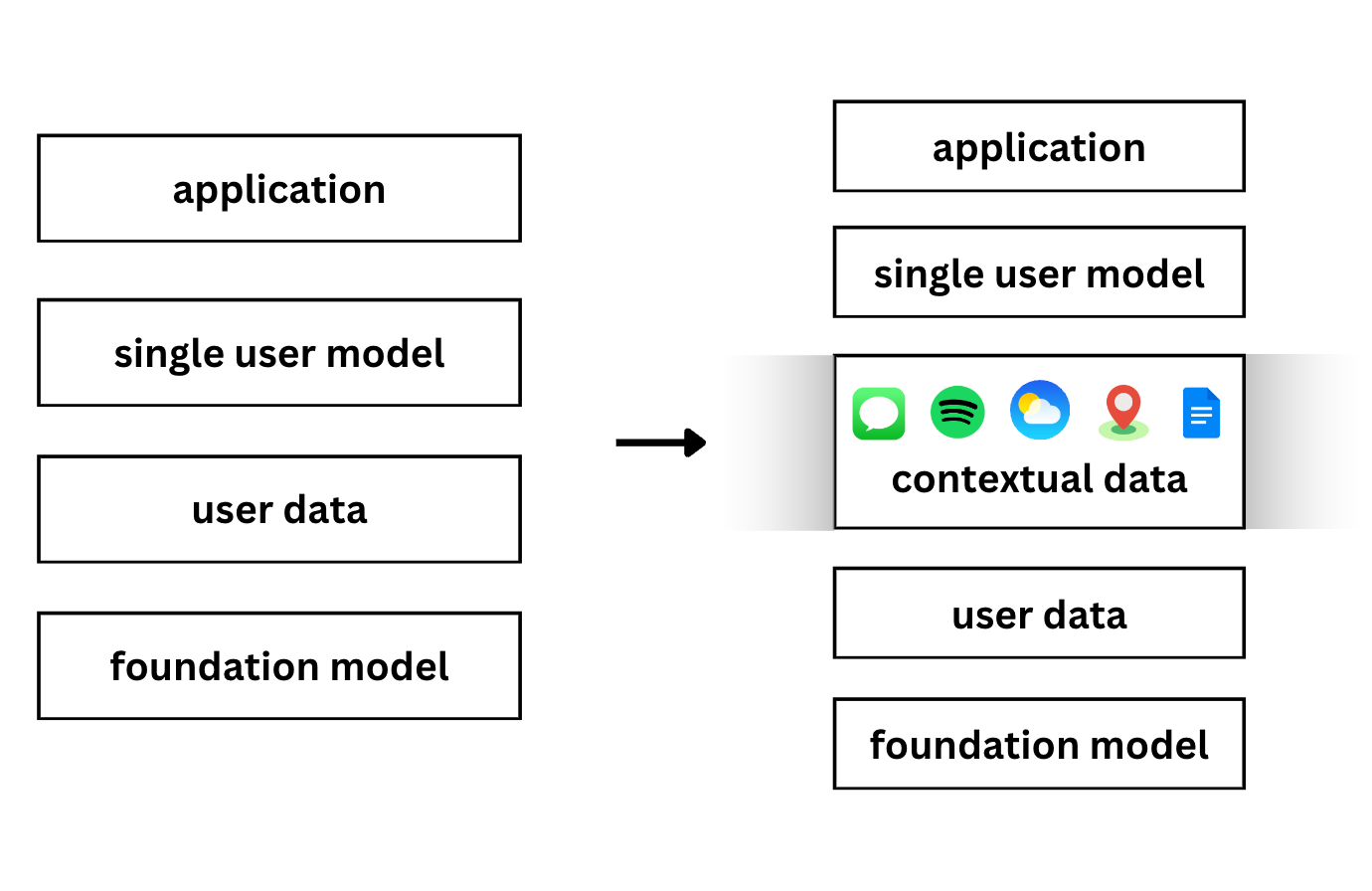

I’d even venture to say that we’re actually no longer discussing recommendation systems, but rather the future of a hyper-aware, context-rich environment that can help us make decisions. If you power a system with not just user data, but contextual data from the surrounding environment, the model that powers that user’s end application becomes all the more valuable. We can inject contextual data - apps, sentiment, emotions, etc. - into the infra stack of the future for recommendations.
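As a thought experiment, here is a small sketch of what "injecting context" could mean in practice: start from a user's own preference scores, then adjust them with signals from the surrounding environment. The signals (`weather`, `free_minutes`), the tags, and the boost values are invented for illustration and don't describe any particular product.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass
class Candidate:
    name: str
    base_score: float      # score from the user's own preference model
    tags: FrozenSet[str]   # descriptive tags for the venue


def boosts_from_context(context: Dict[str, object]) -> Dict[str, float]:
    """Turn raw context signals into per-tag score adjustments (hand-written rules)."""
    boosts: Dict[str, float] = {}
    if context.get("weather") == "rain":
        boosts["outdoor"] = -0.5   # rooftops are less appealing in the rain
        boosts["cozy"] = 0.2
    free_minutes = context.get("free_minutes")
    if isinstance(free_minutes, int) and free_minutes < 90:
        boosts["quick"] = 0.25     # short calendar gap favors fast service
    return boosts


def rerank(candidates: List[Candidate], boosts: Dict[str, float]) -> List[Candidate]:
    """Sort candidates by base score plus any context boosts on their tags."""
    def adjusted(c: Candidate) -> float:
        return c.base_score + sum(boosts.get(tag, 0.0) for tag in c.tags)
    return sorted(candidates, key=adjusted, reverse=True)


candidates = [
    Candidate("rooftop", 0.8, frozenset({"outdoor"})),
    Candidate("bistro", 0.7, frozenset({"cozy"})),
    Candidate("counter", 0.6, frozenset({"quick"})),
]

without_context = rerank(candidates, {})
with_context = rerank(
    candidates, boosts_from_context({"weather": "rain", "free_minutes": 60})
)
```

With no context the rooftop wins on preference alone. On a rainy night with an hour to spare, it drops to the bottom. In a real system the hand-written rules would likely be learned or delegated to a model, but the shape of the idea is the same.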

There’s tremendous opportunity. Here’s what we’re excited for:

Recommendations will be hyper-contextualized by data from apps in the surrounding environment.

Recommendation systems will become conversational and work more like the way humans navigate decisions, like Where Should I Eat NYC.

Technology for translating input into preferences will improve to better reflect the user’s intentions and goals, like Claro and Honcho.

Recommendations will be embedded in native, lower-level systems, living collaboratively amidst all your apps and becoming a real human-computer interface, like Asta and what much of Apple Intelligence will likely feel like.

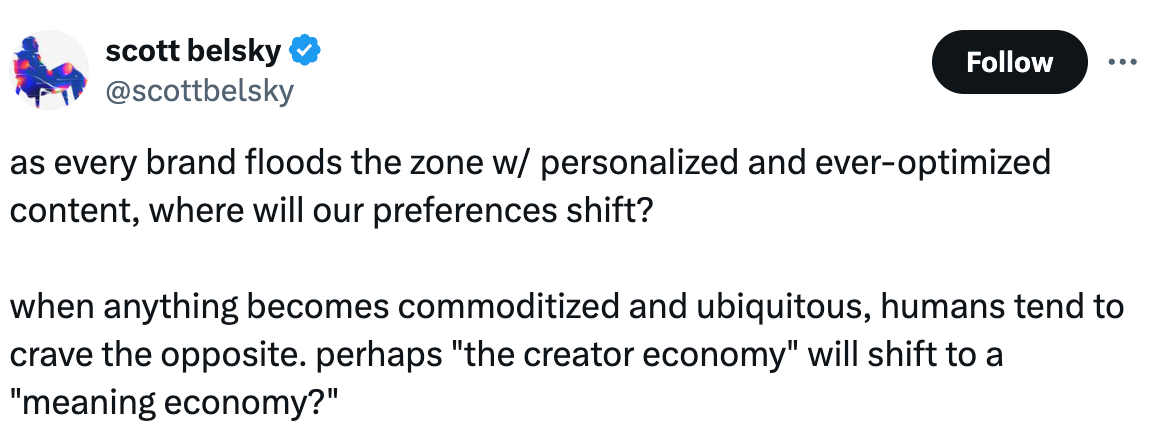

To close out with a “red-team” on this perspective, perhaps over-optimization will result in undesirable outcomes. In a tweet from Scott Belsky of Adobe:

Recommendation systems just seem to be the one topic I can’t get enough of! We’ll see how this one unfolds and report back with our learnings. One more big thanks to Neel for collaborating on this article, and thanks to you all for reading!
