What even is a GPU?

The layman's guide to the history of one of the most sought-after commodities in our world today.

Welcome to Day to Data!

Did a friend send you this newsletter? Be sure to subscribe to get a weekly dose of musings by a data scientist turned venture investor, breaking down technical topics and themes in data science, artificial intelligence, and more. New post every Sunday.

Today, we’re talking GPUs. If you are working in or tangentially to a tech role, you’ve probably heard this word more than you expected to in the last year. But no matter what you do, GPUs are important to understand. I promise not to get too technical and I hope you’ll walk away having learned something new. Let’s dive in.

A quick caveat: There are about 1,000 ways you could tell the story of the GPU's evolution. The following is a version that I hope teaches you a bit and keeps it light, while sharing the quick hits on how this piece of technology has evolved.

A global, political technology

Since the invention of the integrated circuit and early winners like Fairchild Semiconductor, chips have been an incredibly important technology. They’ve impacted global supply chains and secret intelligence organizations for decades. In the 1950s, American spies were ordered to obtain and analyze Soviet semiconductors to see how Soviet progress compared [1]. Later, in the 60s, when Texas Instruments wanted to open a plant in Japan, Sony’s Akio Morita told TI executives to travel incognito, use false names, and never leave their hotel while he navigated the bureaucratic hurdles of bringing an American company to Japan [2]. I could go on. But I think it’s important to set the scene: as chips have grown increasingly complex, the global tensions surrounding their procurement, fabrication, and manufacturing have not let up.

CPU boom

Central processing units (CPUs) were a specialized type of integrated circuit that catalyzed computers and consumer devices, like transistor radios. CPUs were the brains of computers, and as the United States realized computing was going to be a big part of our future, CPUs became a key piece of tech. In one deal in the 1960s, Burroughs, a computer company, bought 20 million chips from Fairchild. By 1968, Fairchild served 80% of the computer market [3]. These were a hot commodity. But things were just getting started.

Key point #1: CPUs, a specialized integrated circuit for computers, act as the brain of a computer.

You’re in an arcade in the 1990s…

And playing the latest game on the Namco arcade system, released in 1979. Companies in the video game space are working hard to make game visuals better, more colorful, and clearer so that games are more engaging. In the second half of the 1990s, 3dfx and its Voodoo 1 graphics card were the best-in-class solution, owning an estimated 85% of the market. Then, in 1999, a company released the GeForce 256 and declared it the first “graphics processing unit”, promising incredible picture quality and major leaps for 3D gaming at a relatively affordable price. That company is called Nvidia.

Key point #2: The early GPU was born from the gaming industry.

How were GPUs able to make such dramatic improvements?

A massive shift in computer architecture was underway in the research community in the 1970s to 1990s. Parallelism was born out of an increased demand on computers to do more at once. Parallelism refers to the ability to run several processes simultaneously. CPUs executed tasks one after another, through serial processing. GPUs became incredibly powerful when they were able to execute tasks in parallel. This meant faster processing speeds, more data processing, and increasing complexity enabling better visuals, faster computations, and other end improvements.
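To make serial versus parallel a little more concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the tiny `image`, the `brighten` function, and the choice of four workers. The threads only stand in for GPU cores; a real GPU runs thousands of these small jobs at once in hardware, and Python threads are not a faithful model of that.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(row, amount=40):
    """Brighten one row of grayscale pixels, capping each value at 255."""
    return [min(pixel + amount, 255) for pixel in row]


# A tiny hypothetical 4x4 grayscale "image" (0 = black, 255 = white).
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [200, 220, 240, 250],
]

# Serial: handle one row after another.
serial_result = [brighten(row) for row in image]

# Parallel: hand the rows to a pool of workers instead of one loop.
# The workers are a stand-in for GPU cores, not a model of real GPU hardware.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel_result = list(pool.map(brighten, image))

# Both approaches produce the same image.
print(serial_result == parallel_result)
```

The point of the sketch is that each row can be brightened without knowing anything about the other rows. Work that splits up this cleanly is exactly the kind of work that benefits from many cores.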

CPUs and GPUs have different purposes, and both are very important still today. GPUs have simply grown more popular as their ability to execute multiple calculations at once is particularly helpful for training large language models.

Key point #3: The major improvements the GPU was able to deliver were heavily dependent on parallelism.

Ok, so what does a GPU do?

GPUs receive data from the computer’s memory, run calculations on it, then send the results back. Early GPUs typically received information about an image, enhanced it so the graphics appeared smoother and higher quality, then sent the enhanced data on to the screen.

For training an LLM, a GPU is capable of receiving large amounts of text data. The text data is made machine readable, by turning words into numeric representations. The data is then batch processed by parts of the GPU called cores. It can process many batches at once thanks to parallelism. As the data is processed in parallel, the LLM is being optimized with each batch. There is memory within the GPU to store intermediate data points as the model is being trained. Developers can use tools like TensorFlow to properly distribute data across a GPU and maximize efficiency.
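The first two steps above, turning words into numbers and grouping them into batches, can be sketched in a few lines of plain Python. This is a toy under stated assumptions: the function names (`build_vocab`, `encode`, `make_batches`) and the example sentences are hypothetical, and real LLM pipelines use subword tokenizers rather than splitting on spaces.

```python
def build_vocab(texts):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn a sentence into a list of word IDs."""
    return [vocab[word] for word in text.lower().split()]


def make_batches(items, batch_size):
    """Split a list into consecutive chunks of at most batch_size items."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


# Hypothetical training text, one sentence per entry.
texts = [
    "the gpu trains the model",
    "the model reads text",
    "text becomes numbers",
    "numbers go to the gpu",
]

vocab = build_vocab(texts)
encoded = [encode(t, vocab) for t in texts]
batches = make_batches(encoded, batch_size=2)

print(vocab)        # word -> ID lookup table
print(encoded[0])   # the first sentence as numbers
print(len(batches)) # how many batches would be sent for processing
```

In a real training run, a framework such as TensorFlow would move batches like these into GPU memory and process them there; this sketch stops at the point where the data is ready to be sent.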

If you want to do a tech deep dive — this lecture from CMU was incredibly helpful.

Key point #4: GPUs can batch process massive amounts of data, with applications typically in graphics or large language models.

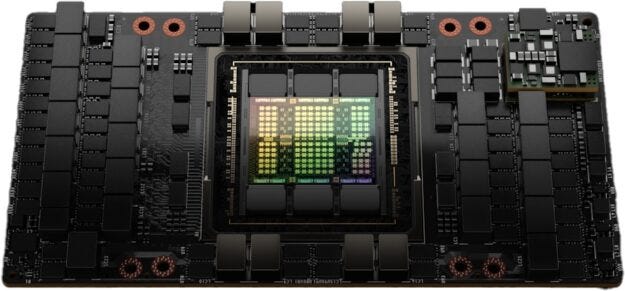

The H100.

A bit on the H100. Nvidia launched the H100 GPU in March 2022. This GPU was game changing in its latency (speed) improvements, performing multiples better than state of the art hardware at the time. The price tag? On average, $30,000 per H100. Nowadays, it would likely take someone months if not years to get their hands on one. Everyone’s on the prowl for some compute.

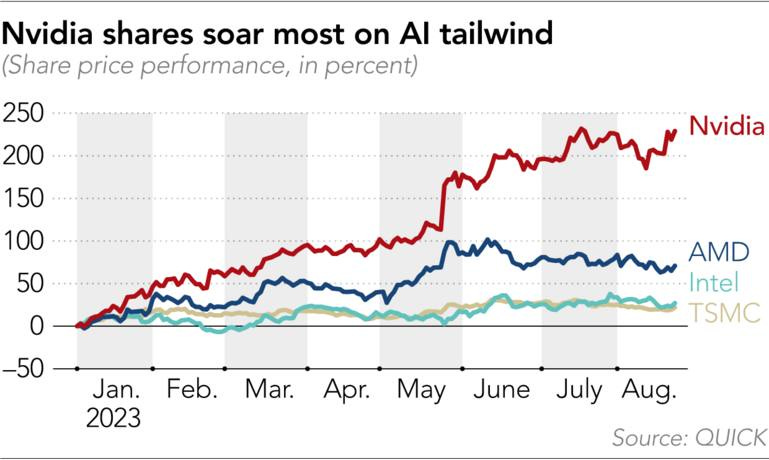

Now’s a good time to call out an arguably more important player in the semiconductor biz: TSMC. Taiwan Semiconductor Manufacturing Co is the world’s largest chipmaker. However, they don’t make their own designs, but rather those of their clients, which include Nvidia. They’ve surely benefited from the AI boom, but Nvidia has seen egregiously outsized stock performance versus other key players, including AMD and Intel. Nvidia’s unparalleled success might have something to do with a little something called CUDA, the software layer they’ve built on top of their GPUs that lets engineers write programs that run directly on the hardware. More on that in an entire newsletter later.

The state of GPUs

From the State of GPUs by Latent.space, some quick facts about the potential environment for GPUs in the next year:

NVIDIA is forecasted to sell over 3 million GPUs next year, about 3x their 2023 sales of about 1 million H100s.

AMD is forecasting $2B of sales for their new MI300X datacenter GPU.

Google’s TPUv5 supply is going to increase rapidly going into 2024.

Meta is putting an estimated 100,000 NVIDIA H100s online.

The market is just going to keep growing. Demand will grow. Supply will also grow. RunPod and loads of other startups are leaning into serverless offerings. Startups offering fractional GPU usage, like CoreWeave, will be interesting to watch as they react to the evolving market. There is going to be so much change in the next few years in the GPU and hardware space. Buckle up, y’all.

That’s a wrap… for now.

As someone pretty entrenched in this space, sometimes it feels like it is a GPU world and we’re all just living in it. For me, learning about the people, history, negotiations, deals, and tech makes it all much more human and real. And looking back at the hockey stick moments for GPUs gives a fresh perspective to analyzing the future. Hope you learned a bit in this week’s post! See y’all next week!

[1] Chip War by Chris Miller

[2] Chip War

[3] Yep, Chip War
