A software girl in a hardware world

With thoughts on cooling in data centers & why it's so important

Earlier this month, I went to Supercomputing, a conference focused on high performance computing, networking, storage and analysis. In layman’s terms — hardware & software for crunching numbers. It was Disneyland for data centers. I’m going to share some of my learnings from the conference and do a deep dive into one of the hot topics — cooling.

SC24

For a bit on Supercomputing: the conference took place in Atlanta during the third week of November. The massive exhibition hall brought together over 18k attendees, with a tilt toward academics and folks working in the HPC ecosystem (data center owners and operators at computing companies). I arrived on Wednesday and could tell I was joining at the midpoint, if only from the raspy, lost voices of the folks staffing the booths. It was a bustling atmosphere, full of curious people I was happy to be among.

A hardware world

As someone who spends most of her time in software, Supercomputing threw me out of my comfort zone. I spent my time navigating through booths full of server racks, cooling tanks, and power systems. It was pretty incredible.

The big companies had pulled out all the stops. Nvidia had a booth with 20+ employees demoing its state-of-the-art GPUs and showing what folks are building with H100s and Blackwell. Vertiv had its modular data centers out for demos. Amid all the hardware, there were even gelato stations, coffee carts, and tacos.

There were a few major themes that came out of SC24 for me, both from sessions I attended and from conversations with other attendees. To highlight a few…

Data centers at the edge: Companies like DUG are deploying shipping-container-sized data centers, complete with power, cooling, and connectivity, in locations at the edge. Interest appeared to come largely from folks in research (think computationally heavy work in a remote location).

Energy efficiency is top of mind: several panels shared the sentiment that, as exciting as AI is, it is putting pressure on companies to keep their energy consumption in check. Read more of my thoughts on this in a past article here.

High performance computing is for more than just AI: While AI was the darling of the event, powerful hardware is also being put to work on traditional machine learning and scientific computing, from mathematics to medicine and robotics to agriculture.

The national labs are not messing around: an impressive showing came from the folks at the national labs like Livermore and NREL. Livermore launched their exascale supercomputer, El Capitan, which has recently been declared the world’s fastest supercomputer.

Honorable mention — Bitcoin mining centers turning into AI hubs: Yep. Bitcoin mining centers are trying to pivot their operations to host more AI workloads.

Far outshining all these topics was one theme — computers are getting really hot, and we need to do something about it. Let’s dig into what’s happening in the data center and the companies racing to cool things down.

Things are getting hot

Just as your laptop heats up when it’s juggling too many processes, all computing hardware generates heat while it’s running.

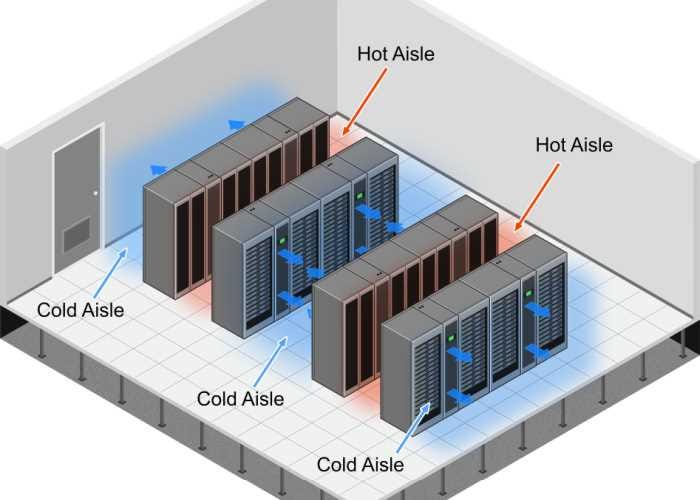

Today’s GPUs and CPUs have components that help regulate their temperature, like the fans you can hear spinning up as your laptop heats up. On top of that, data centers use air cooling to keep servers cool, setting up “hot aisles” where the computers push out their excess heat and “cold aisles” where cold air is pulled into the server racks. As you can see in the image below, the floors are raised so cold air can be delivered to the racks from below.

This setup has been sufficient so far, but it almost certainly won’t hold up over the next decade of AI workloads. Why’s that? The power density of GPUs is increasing. GPUs are delivering more computational power (typically measured in teraflops, or trillions of floating-point operations per second) per unit of physical area or volume of the chip, and drawing more electrical power to do it. While this means you get more processing power from the same size GPU, it creates a host of challenges. The main ones are keeping these systems cool and the complexity of manufacturing them. I’ll save my thoughts on the latter for another day.
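To make “teraflops” and “density” a little more concrete, here is a minimal Python sketch of the usual back-of-the-envelope estimate: peak throughput is the number of compute units times the clock rate times the floating-point operations each unit can do per cycle. Every hardware number below (unit counts, clocks, FLOPs per cycle, die area, wattage) is hypothetical, picked only to illustrate the arithmetic; none are the specs of a real GPU.

```python
# Back-of-the-envelope peak throughput and density for two HYPOTHETICAL chips.
# None of these numbers are real GPU specs; they only illustrate the arithmetic.


def peak_tflops(compute_units: int, clock_ghz: float, flops_per_unit_per_cycle: int) -> float:
    """Peak TFLOPS = units * (cycles per second) * (FLOPs per unit per cycle) / 1e12."""
    flops_per_second = compute_units * clock_ghz * 1e9 * flops_per_unit_per_cycle
    return flops_per_second / 1e12


def per_mm2(quantity: float, die_area_mm2: float) -> float:
    """Divide any quantity (TFLOPS or watts) by die area to get a density."""
    return quantity / die_area_mm2


DIE_AREA_MM2 = 600.0  # hypothetical: both chips use the same die area

# Older hypothetical chip: fewer units, lower clock, lower power.
old_tflops = peak_tflops(compute_units=100, clock_ghz=1.5, flops_per_unit_per_cycle=256)
old_watts = 300.0

# Newer hypothetical chip: more units and more work per cycle on the same area.
new_tflops = peak_tflops(compute_units=150, clock_ghz=1.8, flops_per_unit_per_cycle=512)
new_watts = 700.0

print(f"compute density: {per_mm2(old_tflops, DIE_AREA_MM2):.3f} -> "
      f"{per_mm2(new_tflops, DIE_AREA_MM2):.3f} TFLOPS/mm^2")
print(f"power density:   {per_mm2(old_watts, DIE_AREA_MM2):.2f} -> "
      f"{per_mm2(new_watts, DIE_AREA_MM2):.2f} W/mm^2")
```

With these made-up numbers, compute density goes from 0.064 to about 0.230 TFLOPS/mm² while power density more than doubles (0.50 to about 1.17 W/mm²). That extra heat per square millimeter is what the cooling system has to deal with.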

We’re hitting the power wall

Nvidia’s H100 draws about 700 W per processor. Their latest, Blackwell, will pull about 1 kW. On a single-processor scale this might not seem huge, but when you’re filling a data center with thousands of these processors, the difference is real. Blackwell’s rollout is reportedly slowing due to overheating in its 120 kW racks. Per Tom’s Hardware, “these problems have caused Nvidia to reevaluate the design of its server racks multiple times, as overheating limits GPU performance and risks damaging components. Customers reportedly worry that these setbacks may hinder their timeline for deploying new processors in their data centers.”
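To see how those per-chip numbers add up, here is a minimal Python sketch of the fleet-level arithmetic. The ~700 W and ~1 kW per-GPU figures come from above; the 10,000-GPU cluster and the 72-GPU rack are hypothetical, and the sketch counts only GPU power (no CPUs, networking, or cooling overhead).

```python
# Fleet-level power arithmetic using the per-GPU figures from the text.
# ASSUMPTIONS: the 10,000-GPU cluster and the 72-GPU rack are hypothetical,
# and only GPU power is counted (no CPUs, networking, or cooling overhead).

H100_WATTS = 700
BLACKWELL_WATTS = 1_000


def gpu_power_mw(gpu_count: int, watts_per_gpu: float) -> float:
    """Total GPU power draw in megawatts."""
    return gpu_count * watts_per_gpu / 1e6


CLUSTER_GPUS = 10_000  # hypothetical cluster size
h100_mw = gpu_power_mw(CLUSTER_GPUS, H100_WATTS)
blackwell_mw = gpu_power_mw(CLUSTER_GPUS, BLACKWELL_WATTS)
extra_mw = blackwell_mw - h100_mw

# Practically all of this electrical power leaves the chips as heat,
# so every extra megawatt drawn is an extra megawatt to cool.
print(f"H100 cluster:         {h100_mw:.1f} MW")
print(f"Blackwell cluster:    {blackwell_mw:.1f} MW")
print(f"Extra heat to remove: {extra_mw:.1f} MW")

# Hypothetical dense rack: 72 GPUs at ~1 kW each.
rack_gpu_kw = 72 * BLACKWELL_WATTS / 1e3
print(f"GPU share of one 72-GPU rack: {rack_gpu_kw:.0f} kW")
```

This prints 7.0 MW, 10.0 MW, and 3.0 MW for the cluster, and 72 kW of GPU load for a single rack. If a 120 kW rack held 72 GPUs, as assumed here, the remaining budget would go to everything else in it, such as CPUs, memory, networking, and power-conversion losses.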

And we need solutions

Nvidia’s Blackwell can only achieve peak performance if it’s liquid cooled. Everyone needs solutions to the overheating problem. There are a few flavors of cooling, including but not limited to:

Air cooling: typically room-based or rack-based (as described above with hot and cold aisles)

Underfloor cooling: raised floors that leave room for ducts to deliver cool air to servers from below

Direct-to-chip liquid cooling: Delivers coolant directly to the CPU or GPU heat sinks via tubing.

Immersion cooling: Entire servers are submerged in a non-conductive coolant fluid. Can be single-phase (where the coolant stays liquid) or two-phase (where the coolant cycles between liquid and vapor).

The first two types are table stakes, but the latter two are where the industry is heading. Each type of cooling places its own demands on the data center, like increased access to water or different maintenance requirements.
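One way to see why the industry is moving toward liquid is the basic heat balance Q = ṁ × c_p × ΔT: the heat a coolant carries away equals its mass flow rate times its specific heat times how much it warms up. The minimal Python sketch below applies it to a single rack, assuming a 120 kW heat load, a 10 K coolant temperature rise, and approximate room-temperature textbook properties for air and water. It ignores real-world details like fan and pump efficiency or coolant mixtures, so treat the results as orders of magnitude.

```python
# Heat balance Q = m_dot * c_p * delta_T applied to one rack.
# ASSUMPTIONS: 120 kW rack, 10 K coolant temperature rise, and approximate
# room-temperature properties for air and water. Orders of magnitude only.

AIR_CP = 1005.0          # J/(kg*K), specific heat of air (approx.)
AIR_DENSITY = 1.2        # kg/m^3, air near room temperature (approx.)
WATER_CP = 4186.0        # J/(kg*K), specific heat of water (approx.)
WATER_DENSITY = 1000.0   # kg/m^3, water (approx.)

RACK_HEAT_W = 120_000.0  # heat to remove, in watts
DELTA_T_K = 10.0         # how much the coolant warms up


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Rearranged heat balance: m_dot = Q / (c_p * delta_T)."""
    return heat_w / (cp * delta_t_k)


def volume_flow_m3_s(heat_w: float, cp: float, density: float, delta_t_k: float) -> float:
    """Convert the required mass flow into a volumetric flow."""
    return mass_flow_kg_s(heat_w, cp, delta_t_k) / density


air_m3_s = volume_flow_m3_s(RACK_HEAT_W, AIR_CP, AIR_DENSITY, DELTA_T_K)
water_m3_s = volume_flow_m3_s(RACK_HEAT_W, WATER_CP, WATER_DENSITY, DELTA_T_K)
water_l_min = water_m3_s * 1000 * 60  # m^3/s -> liters per minute

print(f"air:   {air_m3_s:.2f} m^3 per second")
print(f"water: {water_l_min:.0f} liters per minute")
print(f"air needs ~{air_m3_s / water_m3_s:,.0f}x the volume flow of water")
```

Under these assumptions, air has to move roughly 10 cubic meters per second through a single rack, while water needs only about 172 liters per minute, thousands of times less volume. That gap is the core argument for direct-to-chip and immersion cooling.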

There are a few companies worth having on your radar that are building for the cooling market.

Submer: The Barcelona-based company recently raised €50M to scale its immersion cooling systems. As shown below, Submer builds tanks where servers sit in a bath of coolant. The tanks are big and require rethinking the data center’s architecture before they are installed, due to different water, fluid, and space requirements. Submer makes its own immersion fluid, but its tanks also work with fluids from Shell or ExxonMobil.

CoolIT: The company, acquired by KKR and Mubadala for $270M in May 2023, provides cooling systems that deliver liquid coolant directly to the processors generating heat. As shown below at right, they build “coldplates,” low-profile plates that sit on top of a processor. CoolIT also builds rack-level solutions in the form of liquid-to-air coolant distribution units, shown below at left.

I suspect these are two companies you haven’t heard of, but they’re building incredible hardware and stand to benefit tremendously from the AI boom.

We’ve got a lot to build

There’s a lot of value to be captured in software thanks to AI. However, building that software requires a massive buildout of physical infrastructure to meet demand. Supercomputing was a view into the world behind the scenes: the processors, wires, cooling tanks, and more that underpin the software-level success everyone’s chasing. And we’re just at the beginning.

Thank you for reading this month’s Day to Data. It’s just a slice of what I’m thankful for this Thanksgiving week, so thanks for being a part of it! See you all in December.
