my running watch has too much confidence in me.

but it's a machine learning model so it must be right?

Today we’re talking about predictive fitness analytics, and how I strongly believe my running watch is too confident in me. I hope you’ve enjoyed day to data so far. If you’re not yet subscribed, join the fun by clicking below.

my watch has too much confidence in me

If you’re an athlete, you may use some device to help track your progress and calculate metrics based on your vitals to indicate performance. For runners, these devices usually exist in the form of a running watch, maybe a heart rate monitor, or an app on your phone. For me, my Garmin watch is just a part of me now. The company has become a mainstay in the running community as a leader in watches with accurate metrics, both GPS and biometric based.

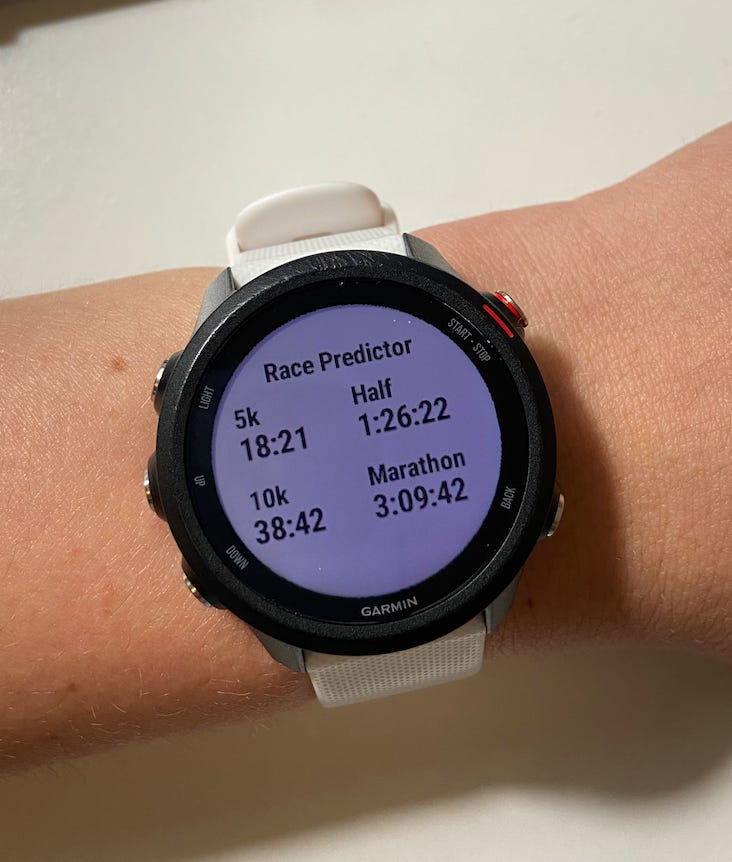

If you’re a Garmin user, as you scroll through your watch, you’ve probably come face to face with a screen I have a love-hate relationship with: Race Time Predictions. Garmin has partnered with Firstbeat - a Finnish company that specializes in “advanced performance analytics for stress, recovery, and exercise” - to provide predictions for athletes for their 5k, 10k, half marathon and full marathon race times. A lot of days I look down at my watch and wonder, “why does my Garmin have so much confidence in me?”. Today we will dive into technologies and innovations behind predictive fitness measures like these and learn more about where all that confidence comes from.

what’s on the market

It is getting easier and easier for runners to interact with powerful data science models that can help empower us to train harder, run faster, and run longer. And it’s not exclusive to runners. All areas of personal fitness, with the growing use of wearables like Garmin watches, Apple Watches, heart rate monitors and more.

Strava’s Fitness Score is based off of “Relative Effort” (measured by either heart rate, or user perceived effort) and/or power meter data that enables Strava users to see how they are doing today compared to their fitness in the past

Freeletic’s Bodyweight Coach learns from the app’s users behavior to become a tailored, machine learning driven coach in your pocket.

RaceX, the official predictive analytics of USA Triathalon, provides users optimized race execution plans based off their training history.

These companies are building just a sample of the thousands of predictive fitness models that are integrated in the products athletes are using every day.

how they’re predicting our fitness

From past posts, you’ll know that models rely on training data. The more training data, the better. If there’s one thing the wearables market truly helped explode is the amount of data companies are able to gather on the health and wellbeing of the users. Athletes went from being able to punch in a few numbers in an app to log their miles for the day to being able to know their heart rate, cadence, and exertion at second-by-second intervals over the course of a workout.

This explosion of data points has allowed the predictive fitness industry to explode. With the pandemic, there was an absolute boom in the sports and personal fitness sectors. Strava, the leading social platform for athletes, saw 33% increase from 2019 to 2020 in number of uploads to their application. These numbers have showed no signs of slowing, with a 38% increase from 2020 to 2021. The wearables market has existed for some time, but wearables plus the explosion of fitness logged using such devices truly has allowed for the data to scale predictive fitness measures.

garmin predicting some ambitious race times

For those unfamiliar with the feature, here’s a peak at what my running watch is showing me at the time of writing this article. Looking at these times, as a 3:36 marathoner and someone who’s only broken 20 minutes in the 5k a handful of times, these estimates are a stretch for me. I’m always up for a challenge, but where is Garmin coming up with these numbers?

There’s usually a good bit of mystery behind the real machine learning models powering the predictions we actually interface with as a user (which is something as a data scientist I always find frustrating). If we check out Garmin’s FAQ, we learn:

The watch will use VO2 max. estimate data and your training history to provide a target race time. The watch analyzes several weeks of your training data to refine the race time estimates.

The projections may seem inaccurate at first. The watch will require a few runs to accurately provide an ideal prediction. These times are just predictions and do not factor in variables such as weather, course difficulty, or training regimen.

From this, we can read a bit about their features - VO2 max and training data - as well as their target variable - race time estimates, and their disclosure that factors such as weather, course difficulty, or training regimen are not included in the model’s outputs.

With a simple search of Github, you can find several attempts made at reverse engineering something like this. If you’re a Garmin user, you could even download your personal activity history to build a model tailored to your history.

I want to use this example to call out a foundational element of data science model development - feature engineering. Feature engineering is the process of making a dataset “better suited to the problem at hand”. Let’s explain through an example using our running training data as a dataset.

If I have a dataset containing my date of activity, age, pace, time, distance, maximum heart rate for that run, minimum heart rate for that run, and average heart rate for a single instance of a run as features in a training dataset containing hundreds of runs, I could use feature engineering to extract more value from a small set of features. How? As a runner (applying my domain knowledge!), I know that I am getting fitter when I can tackle the same pace and distance run at a lower average heart rate. If I know my maximum heart rate (typically something known by age of the athlete), and I calculate:

average heart rate for this run / max heart rate as an individual for my age

Then with this new calculation, I can see how this number changes over the course of my training (ie has the avg HR / max HR for a run of the same pace and distance gone down over time?). I now have used the existing features to create a more powerful metric that tracks my heart rate variability. Feature engineering is just one of many skills in the data science toolkit, and something that you can always learn new methods and apply new domain knowledge to improve.

For a crash course on Feature Engineering, I’d recommend this course on Kaggle.

Thanks for reading this week’s post. I’m excited we’ve officially reached 9 posts for the year! More to learn and more to write about. See you next week.