An ode to the Jupyter Notebook

The history and tech behind one of data science's most fundamental tools.

Welcome to Day to Data!

Did a friend send you this newsletter? Be sure to subscribe here to get a post every Sunday covering the technology behind products we use every day, rising trends in tech, and interesting applications of data science for folks with no tech background.

If you have done any Python coding, you probably know about a little something called the Jupyter Notebook. Whether you’re familiar or not, you’ll get to learn a bit about its origin story and the tech behind one of the most commonly used tools in data science!

A bit of history

In 2001, Fernando Pérez, then a graduate student and today a UC Berkeley professor, started to build tools to analyze data in an easy and collaborative way. The Jupyter Notebook is just one of the tools launched by Project Jupyter.

The existing tools for coding workflows didn’t mirror the trial & error methods of science that researchers were used to. Perez wanted to mirror the interactive and exploratory nature of science, beginning with breaking up code into smaller, modular sections that would enable developers to test, explore, and iterate quicker. Additionally, it was hard to share code with people who weren’t working on your project at the time.

In the late 1980s, Wolfram Mathematica and Maple released notebooks built on a front end and a kernel, an architecture similar to the one Jupyter would build upon. In 2008, GitHub was started. It wouldn’t be until 2011 that IPython would release its notebook. Jupyter then spun out of IPython in 2014, and the Jupyter Notebook was introduced to the world.

The Jupyter name came from the languages it supported: Julia, Python, and R.

What made Jupyter Notebooks so impactful

Sharing code and collaboration have always been known as accelerants to innovation. In 1984, Donald Knuth introduced literate programming, which is: “a methodology that combines a programming language with a documentation language, thereby making programs more robust, more portable, more easily maintained, and arguably more fun to write than programs that are written only in a high-level language.” Literate programming was a step towards making code easily digestible, modular, and approachable for a non-technical crowd.

We’ve talked about open source and the rate at which the open source community makes improvements, finds bugs, etc. Open source would be next to nothing without GitHub, but early collaborative programming would be nothing without the Jupyter Notebook. An article published in Nature declared that the code for Jupyter Notebooks was one of ten that transformed science (as of Jan 2021, pre major AI boom).

I also see Jupyter Notebooks as a way to lower the barrier to entry for new programmers. Getting started as a programmer can be intimidating. It can take hours of debugging just to make an off-the-shelf MacBook ready to compile code in many languages. With a Jupyter notebook, your time to value is dramatically shortened, as the kernel and interface are really easy to set up. Even more so, the .ipynb file type can be shared with others who can read your code, make edits, and help improve your work. There are an estimated 10 million Jupyter notebooks on GitHub alone. Jupyter Notebooks have been a major driver in innovation, from drug discovery to computer vision. Researchers have discovered some pretty incredible stuff thanks to the collaborative nature we love from Jupyter notebooks.

The tech behind the Jupyter notebook

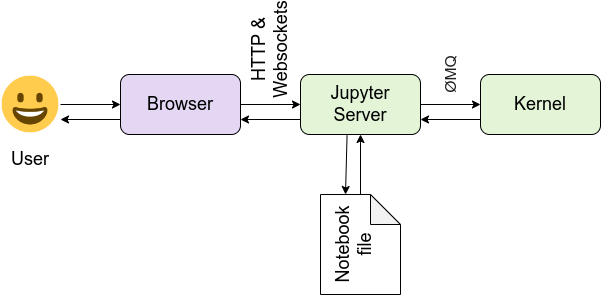

There are two fundamental parts to a Jupyter notebook: 1) a kernel and 2) a front-end browser page that looks like a notebook. These pieces work together to make an easy experience for developers. Here’s a high-level view of the architecture; let’s break down the parts.

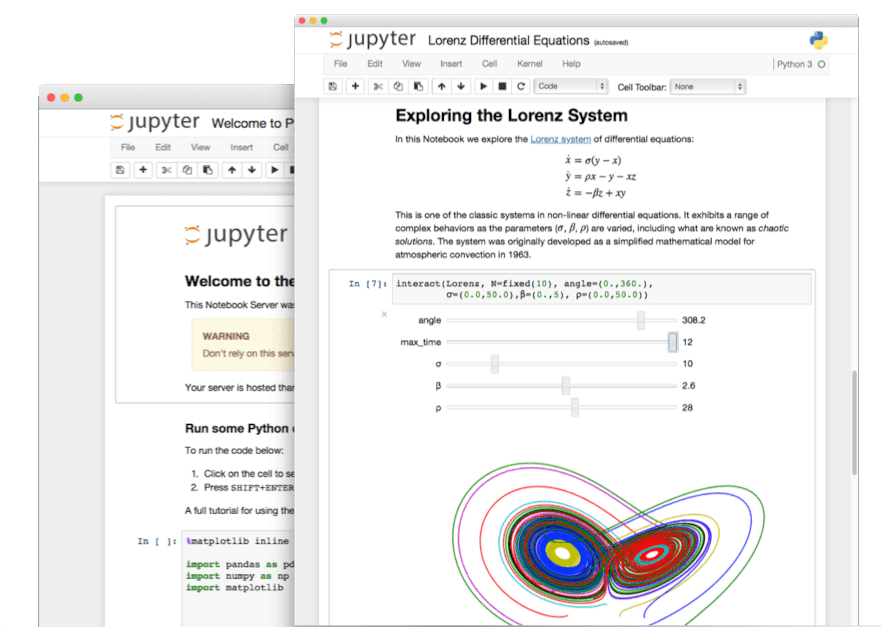

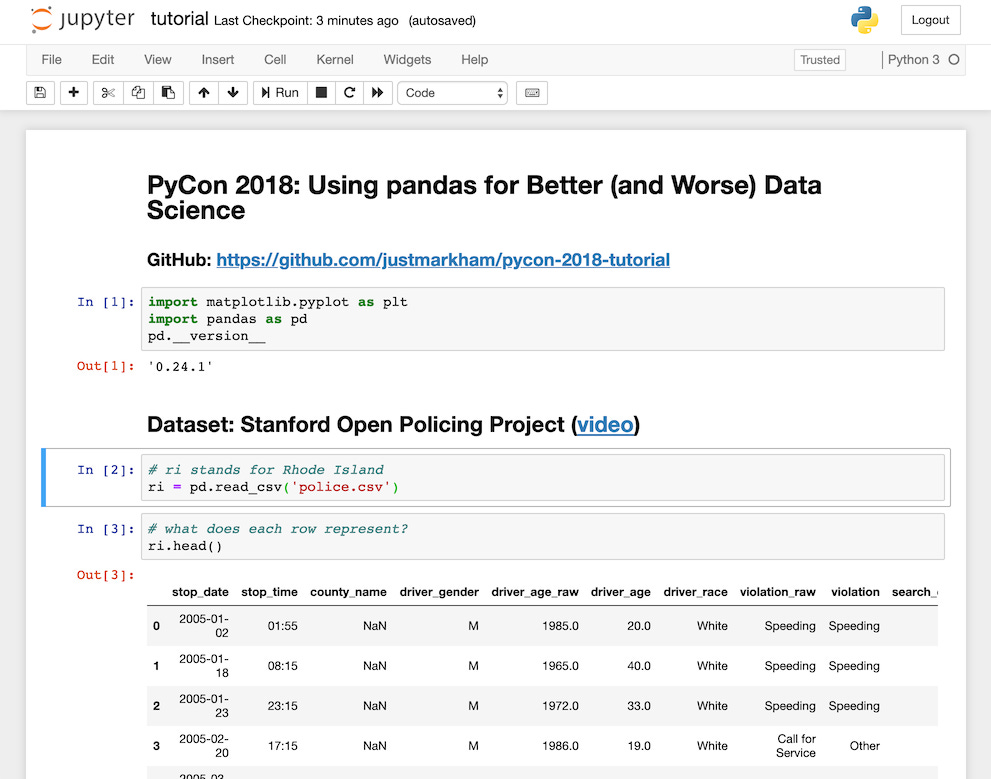

First, the notebook. The user interacts with the notebook through the Jupyter Server, which saves all changes to a notebook file with the extension .ipynb. See below for a screenshot of what a Jupyter Notebook looks like. There are a few main features that make it incredible for programming:

Cell-based structure for code development. Each “cell” is a block of code or Markdown text that’s been added by the programmer. Although cells are run independently, they are all run by the same kernel (more details later), so one cell knows about the values and definitions in other cells.

Markdown text functionality that lets authors add comments and documentation to help walk collaborators through the code.

In-line rendering of interactive charts and graphs
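To make the cell idea concrete, here is a rough sketch of what a notebook file can look like on disk. An .ipynb file is JSON text, and the snippet below hand-builds a tiny one with one Markdown cell and two code cells. The cell contents (the "Sales analysis" title and the `prices` list) are made up for illustration, and the field layout follows my understanding of version 4 of the notebook format, so treat it as an approximation rather than a full specification.

```python
import json

# A hand-built notebook, assuming the general layout of notebook format version 4.
# The cell contents are invented purely for illustration.
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {
            # Markdown cell: documentation that sits alongside the code
            "cell_type": "markdown",
            "metadata": {},
            "source": "# Sales analysis\nThis cell explains what the code below does.",
        },
        {
            # Code cell: defines a variable that later cells can use
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": "prices = [3, 5, 8]",
        },
        {
            # Code cell: depends on `prices` from the previous cell
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": "total = sum(prices)\nprint(total)",
        },
    ],
}


def cell_types(nb):
    """Return the type of each cell, in notebook order."""
    return [cell["cell_type"] for cell in nb["cells"]]


# This JSON text is what would be stored in the .ipynb file.
ipynb_text = json.dumps(notebook, indent=1)
print(cell_types(notebook))
```

Because the file is plain JSON, it is easy to share and easy for other tools to read, which is a big part of why notebooks travel so well between collaborators.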

Next, the kernel. “Kernel” is a pretty standard term in computing. Put simply, the notebook kernel receives the code snippets a user writes, interprets and executes them, and returns the result.

When you open up a Jupyter Notebook, it will connect to a kernel that provides compute for the code you’ll write in a notebook. The kernel contains information on how to run the language-specific code (i.e., Python vs. JavaScript). It maintains the values of the variables you’re declaring in your code, which lets a user write new snippets of code that depend on the code that’s been run before them. Kernels can also be shut down or interrupted, which can be helpful to debug your code or stop processes that are taking too long.
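The way a kernel remembers variables between cells can be imitated in a few lines of plain Python. The `ToyKernel` class below is a hypothetical stand-in I made up for illustration, not how the real Jupyter kernel is implemented (a real kernel runs as a separate process and talks to the notebook over a messaging protocol). It only shows the core idea: every cell runs against the same shared namespace, and a restart wipes that namespace.

```python
import contextlib
import io


class ToyKernel:
    """Toy stand-in for a notebook kernel: runs code and remembers variables."""

    def __init__(self):
        self.namespace = {}       # shared state that outlives any single cell
        self.execution_count = 0  # how many cells have been run so far

    def execute(self, code):
        """Run one cell's code and return whatever it printed."""
        self.execution_count += 1
        captured = io.StringIO()
        with contextlib.redirect_stdout(captured):
            exec(code, self.namespace)
        return captured.getvalue()

    def restart(self):
        """Forget every variable, like restarting a kernel."""
        self.namespace = {}
        self.execution_count = 0


kernel = ToyKernel()
kernel.execute("prices = [3, 5, 8]")                       # first "cell"
output = kernel.execute("total = sum(prices)\nprint(total)")  # second "cell"
print(output)  # the second cell could see `prices` from the first
```

After `kernel.restart()`, running `print(total)` would raise a `NameError`, which mirrors what happens in a notebook when you restart the kernel and run a later cell before the earlier ones.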

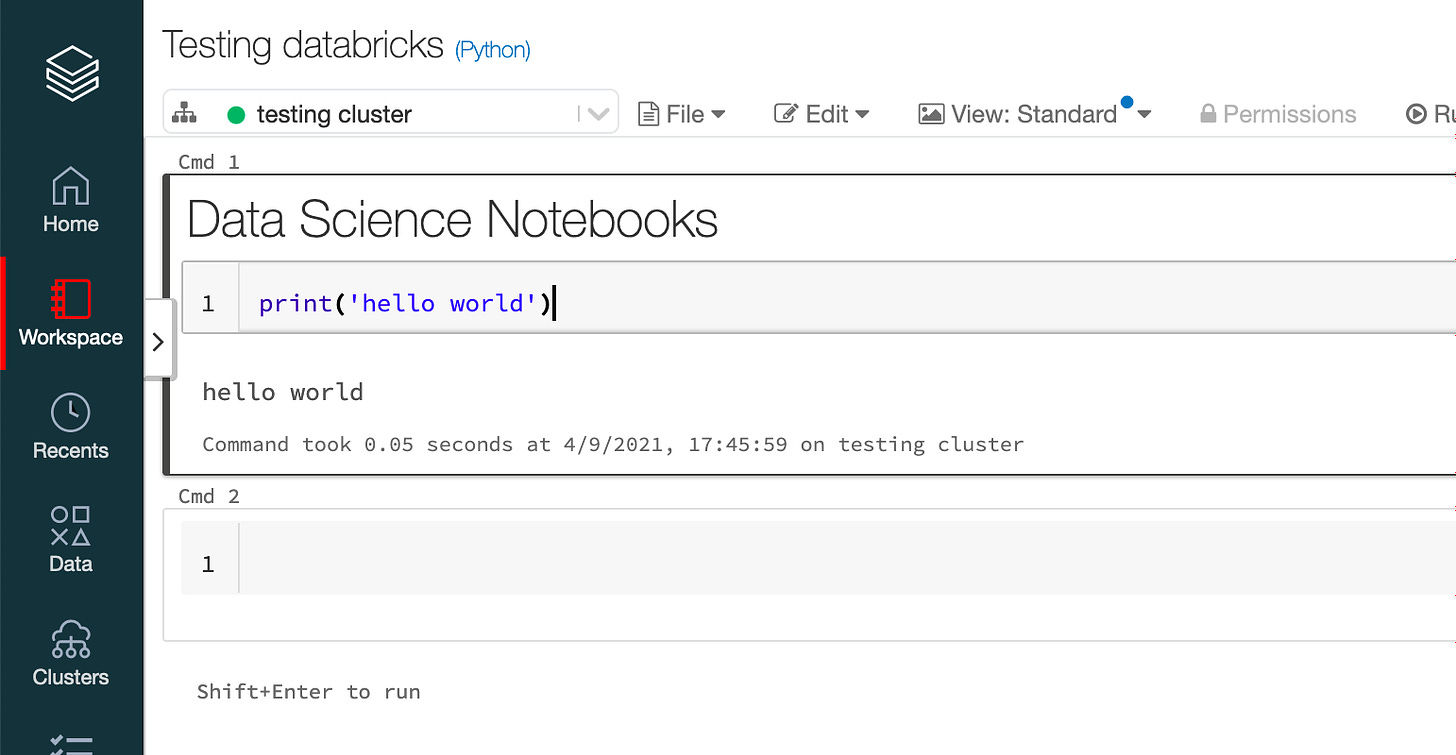

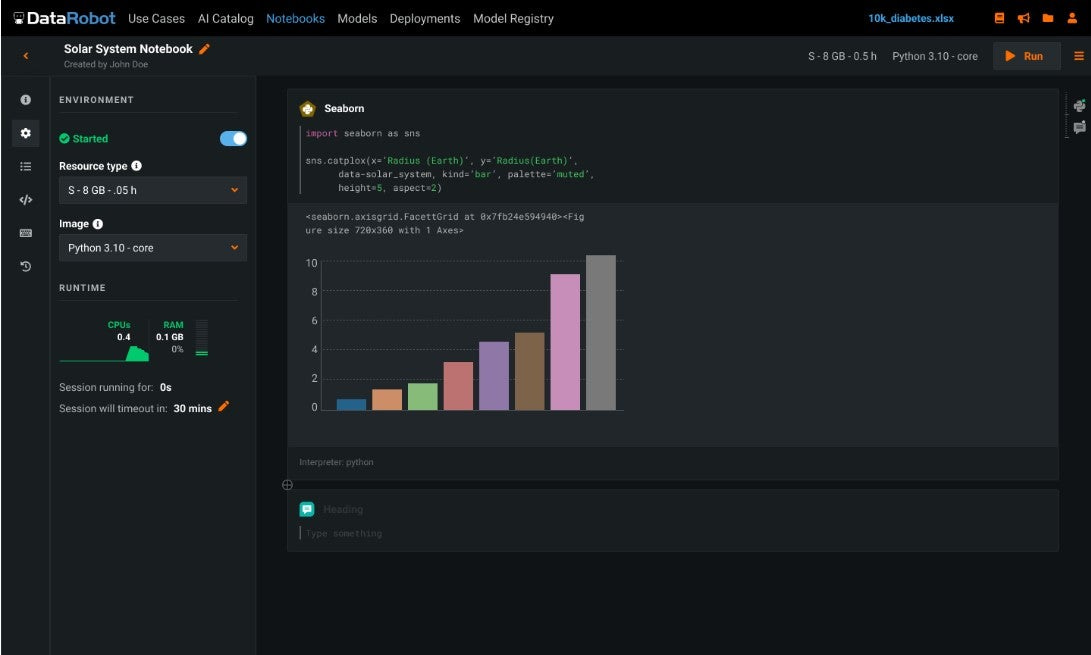

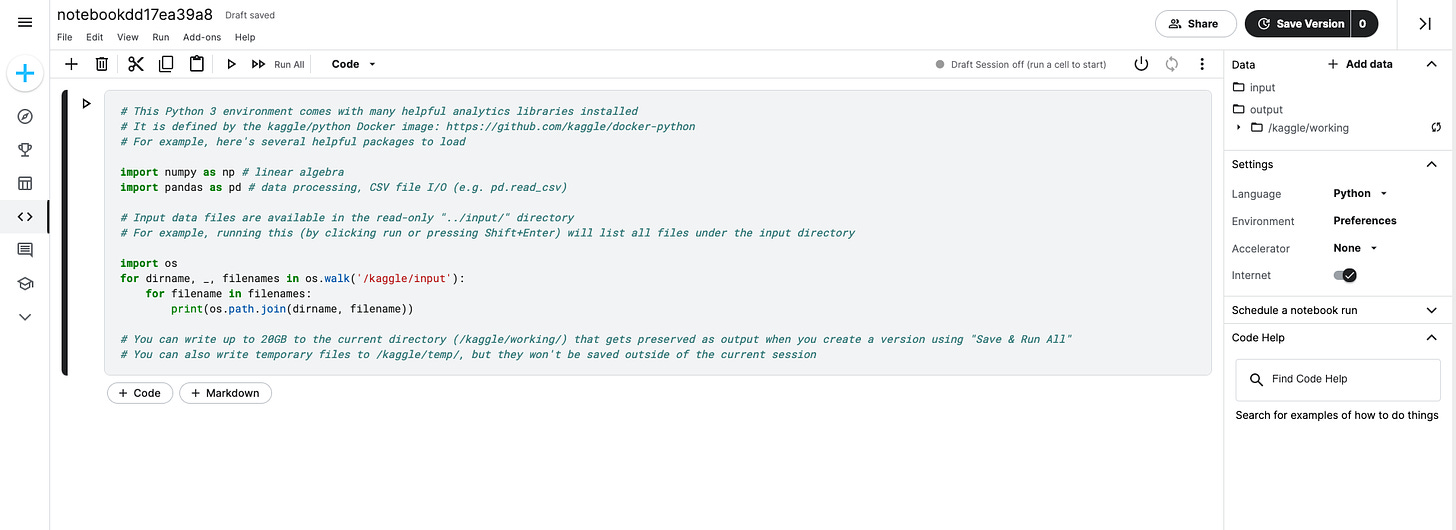

Jupyter Notebooks walked so everyone else could run

The “Notebook” is now functionality every programmer expects when starting a new project. Take a scroll through the following screenshots. Jupyter Notebooks are now embedded in, or the inspiration for, products on major platforms like Databricks, DataRobot, and Kaggle. The notebook interface is easy to understand, and it makes programming on a new platform feel familiar for data scientists.

The notebook is an exciting, foundational product that has supercharged programmers to build and discover some epic stuff. Thank you Jupyter!

This week’s quick bites

Some friends said they liked the end of last week’s newsletter, so we’ll make this a regular roundup of my top finds from the week.

Unless you live under a rock, you saw that Sam Altman was ousted as CEO of OpenAI in incredibly dramatic fashion, as the board cited a lack of trust in his communications. Mira Murati, OpenAI CTO, is stepping up as interim CEO. Greg Brockman, cofounder and President, quit soon after the announcement. This is going to be a tech hot topic for a while and is an HBO series in the making.

Following the OpenAI announcement, a quick piece on board control by Ed Sims

The State of Silicon and the GPU Poors with Dylan Patel of SemiAnalysis — discussing the incoming wave of GPU supply, FLOPs demand, and transformers.

An April 2022 episode of the SmartLess podcast with Michael Lewis, so naturally I started reading The Big Short this week

Nathan Fielder and Emma Stone’s much-awaited series The Curse came out last week, and the second episode will be out by the time you’re reading this. No spoilers, but the first episode was so weird.

Everton, the Premier League football club, was docked a record-breaking 10 points for breaching the league’s financial rules.
